Understanding the AI Diffusion Framework

In a new perspective, I explain and analyze the AI Diffusion Framework—what it does, how it works, its rationale, why it was needed, why China can't easily fill the void, and some thoughts on model weight controls.

This is a cross-post of my X thread on my paper "Understanding the Artificial Intelligence Diffusion Framework."


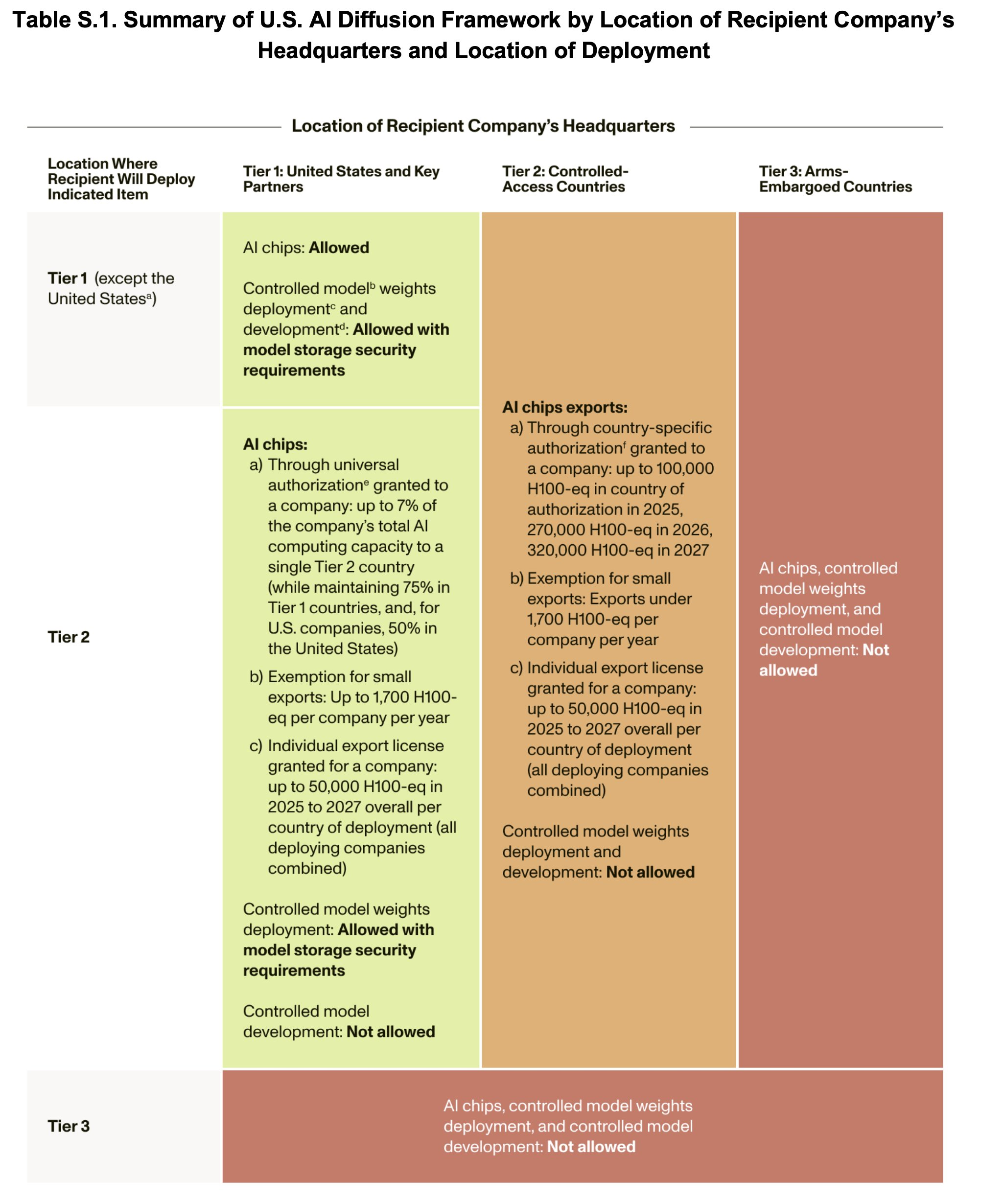

This table gives the best overview: The framework applies rules based on company HQ location and export destination—covering both advanced AI chips and certain model weights.

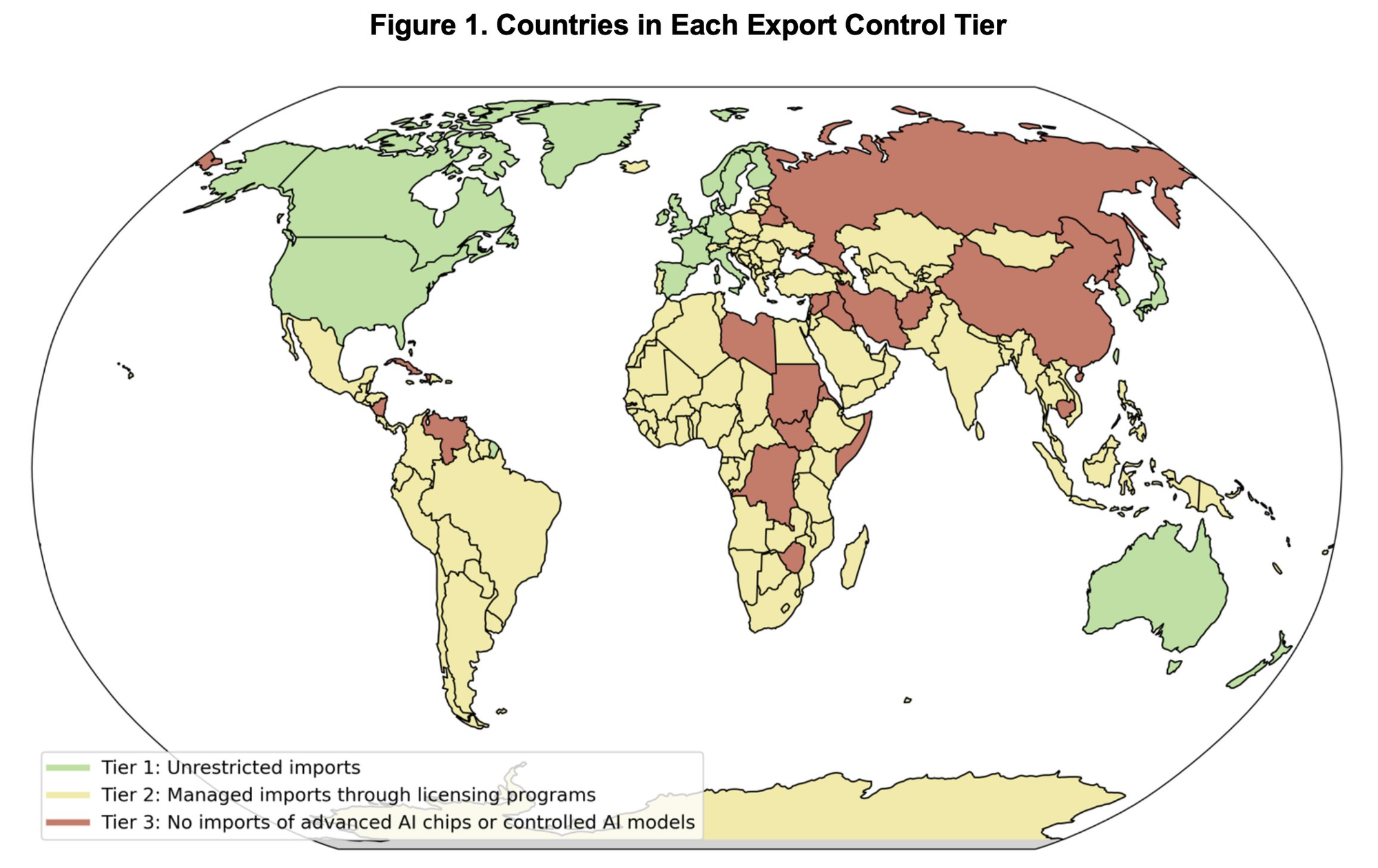

Three country tiers determine access rights and security requirements. Countries in Tier 1 face no import restrictions. Tier 2 countries can receive exports only through authorized companies or under licenses, while Tier 3 countries remain subject to existing restrictions.

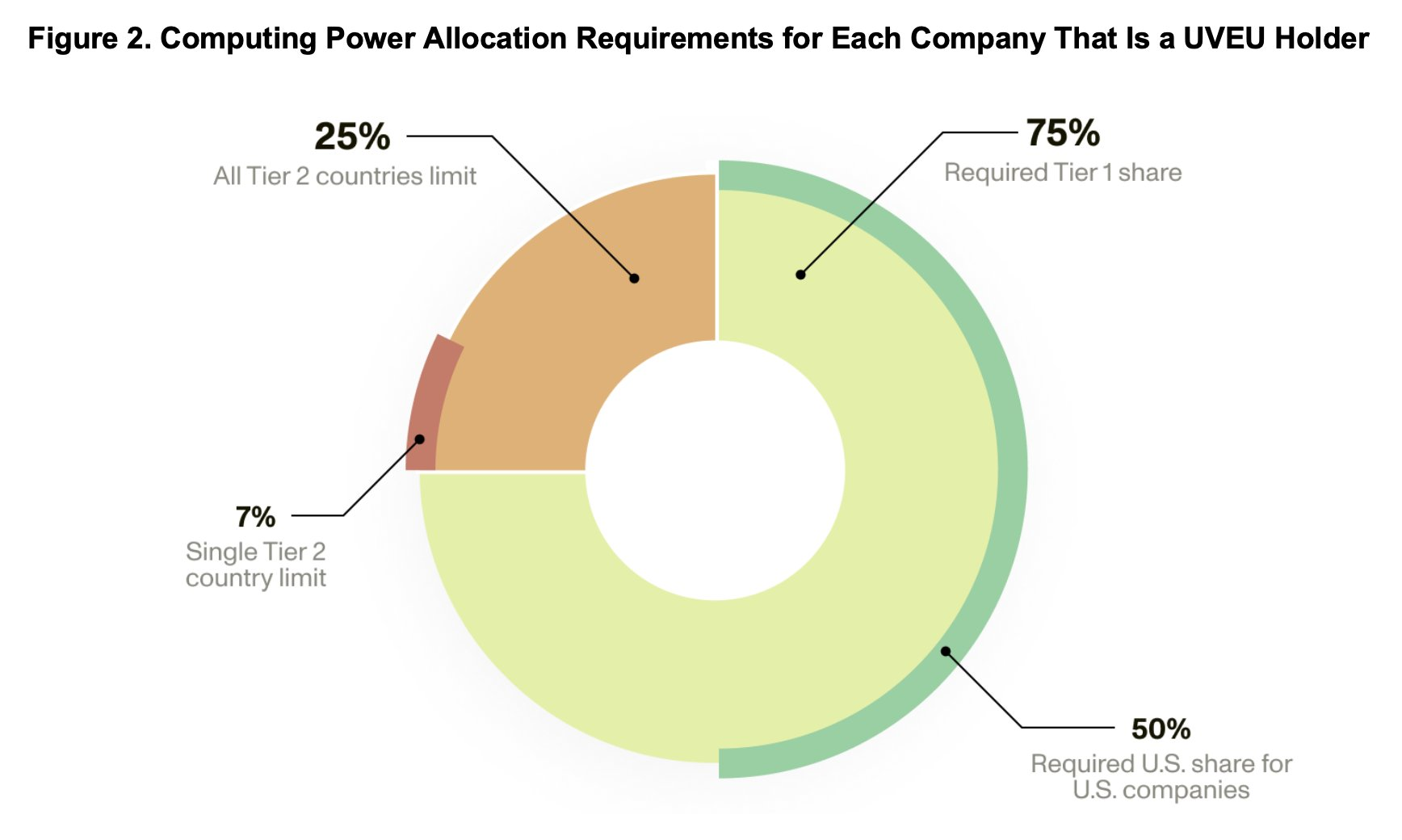

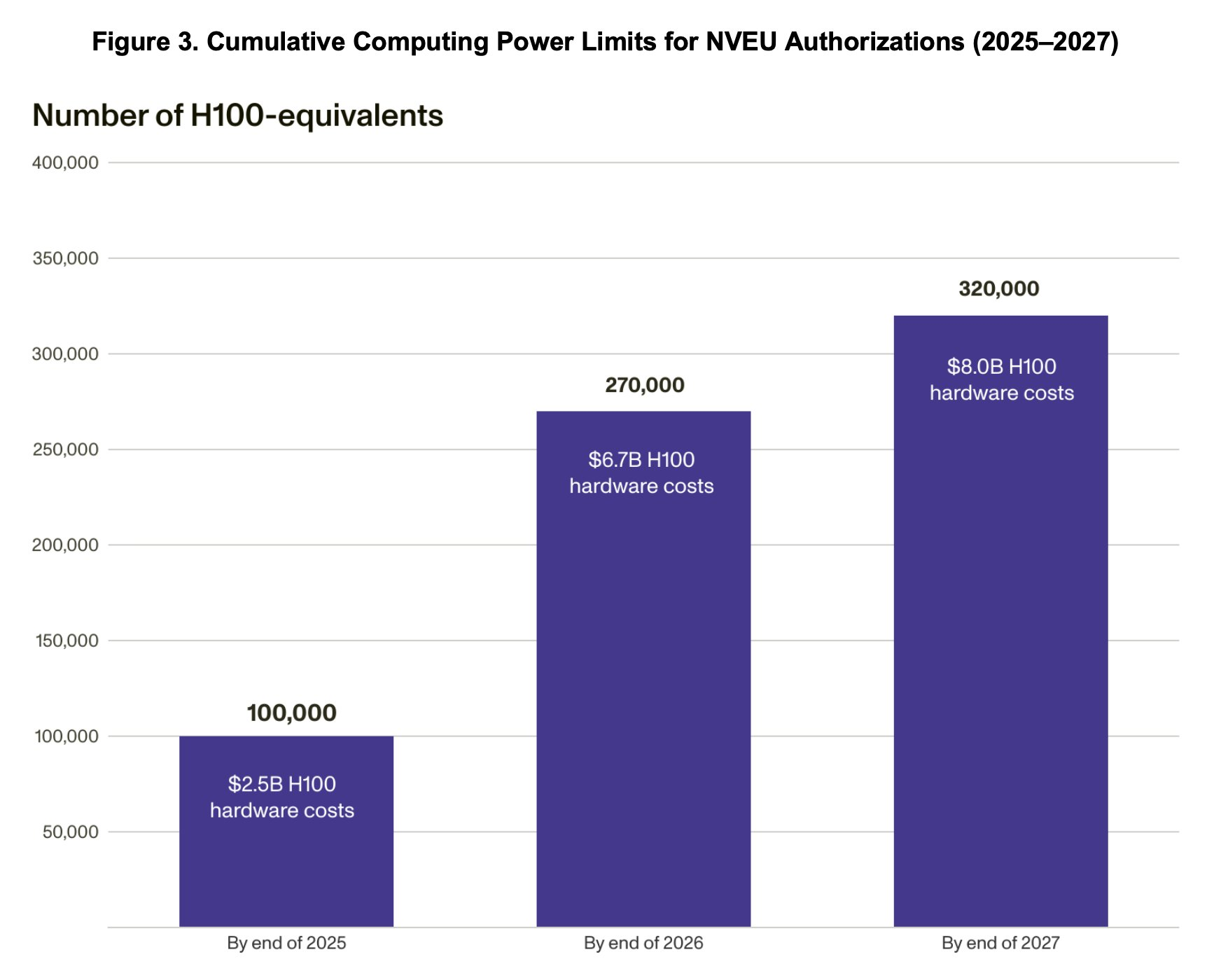

Tier 1 companies can deploy globally through a universal authorization, but they must keep at least 75% of their compute in Tier 1 countries and may place at most 7% in any single Tier 2 country. U.S. companies must also keep at least 50% of their compute in the U.S.
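To make these allocation caps concrete, here is a minimal Python sketch that checks a hypothetical deployment against the thresholds as summarized in this thread: at least 75% of compute in Tier 1, at most 7% in any single Tier 2 country, and at least 50% in the U.S. for U.S. companies. The function name `check_allocation`, the country-to-tier mapping, and the idea of measuring compute as a single number per country are illustrative assumptions, not the rule's own definitions, which are more detailed.

```python
# Illustrative sketch only: thresholds follow the thread's summary,
# not the regulatory text; names and the tier mapping are hypothetical.

TIER1_MIN_SHARE = 0.75              # at least 75% of compute in Tier 1 countries
TIER2_MAX_SHARE_PER_COUNTRY = 0.07  # at most 7% in any single Tier 2 country
US_HOME_MIN_SHARE = 0.50            # U.S. companies: at least 50% in the U.S.

# Hypothetical example mapping from country code to tier.
ILLUSTRATIVE_TIERS = {"US": 1, "DE": 1, "IN": 2, "AE": 2}


def check_allocation(compute_by_country, tier_of, us_company):
    """Return a list of human-readable violations (empty if none).

    compute_by_country: country code -> compute amount, in any consistent unit.
    tier_of: country code -> tier (1, 2, or 3).
    us_company: whether the provider is headquartered in the U.S.
    """
    total = sum(compute_by_country.values())
    if total <= 0:
        raise ValueError("total compute must be positive")

    violations = []

    # Share of all compute located in Tier 1 countries (the U.S. counts as Tier 1).
    tier1 = sum(c for k, c in compute_by_country.items() if tier_of[k] == 1)
    if tier1 / total < TIER1_MIN_SHARE:
        violations.append(f"Tier 1 share {tier1 / total:.0%} is below 75%")

    # Per-country checks for Tier 2 caps and Tier 3 deployments.
    for country, amount in compute_by_country.items():
        share = amount / total
        if tier_of[country] == 2 and share > TIER2_MAX_SHARE_PER_COUNTRY:
            violations.append(f"{country} share {share:.0%} exceeds 7%")
        elif tier_of[country] == 3 and amount > 0:
            # Assumption: Tier 3 deployments fall outside the universal authorization.
            violations.append(f"{country} is Tier 3")

    # Home-country requirement, applied only to U.S.-headquartered providers.
    if us_company:
        us_share = compute_by_country.get("US", 0) / total
        if us_share < US_HOME_MIN_SHARE:
            violations.append(f"U.S. share {us_share:.0%} is below 50%")

    return violations


# Usage: two Tier 2 countries at 10% each breach the 7% per-country cap.
print(check_allocation({"US": 60, "DE": 20, "IN": 10, "AE": 10}, ILLUSTRATIVE_TIERS, us_company=True))
# → ['IN share 10% exceeds 7%', 'AE share 10% exceeds 7%']
```

Returning a list of violations rather than a single boolean makes it easy to see which cap a given layout breaks; the same 80/20 split between Tier 1 and Tier 2 passes once the Tier 2 share is spread across enough countries to stay under 7% each.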

Tier 2 companies face stricter rules: they need a separate authorization for each country and are subject to compute caps.

The framework requires safeguards in Tier 2: cybersecurity standards, physical security, personnel vetting, and supply chain independence from Tier 3 countries. These protect against chip diversion, unauthorized access, and model weight theft.

Compute providers from the U.S. and its Tier 1 partners will lead global AI diffusion with the greatest deployment flexibility. They can deploy without restriction in Tier 1 and, through a one-time universal authorization, can build data centers across Tier 2.

The framework’s immediate impact may appear more dramatic than it actually is. The US already hosts the majority of global AI compute capacity, and US compute providers dominate the global market.

The framework’s primary effect will likely be on future infrastructure decisions, creating stronger incentives to develop new compute clusters within U.S. borders. It's in our shared interest to keep the biggest clusters secure.

Cloud computing remains widely accessible globally. While AI chips face broad export controls, restrictions on compute usage apply only to the development of controlled models. Sensible move.

Today, we publish “Governing Through the Cloud: The Intermediary Role of Compute Providers in AI Regulation.”

We propose compute providers as an important node for AI safety, both in providing secure infrastructure and acting in an intermediary role for AI regulation, leveraging…

— Lennart Heim (@ohlennart) March 13, 2024

The risk of alternative AI ecosystems is mitigated by existing U.S. and Tier 1 advantages. U.S. technology leads across the entire AI stack.

China faces major constraints in AI chip production—both quality and quantity—due to existing semiconductor controls. Plus, countries make strategic partnerships based on broader factors like military cooperation and security guarantees.

Of the 886 notable AI models tracked by @EpochAIResearch, 263 have known training hardware. Only 2 of those 263 used Huawei Ascend chips, while 31 Chinese models used NVIDIA chips.

— Lennart Heim (@ohlennart) January 2, 2025

The framework requires companies to cut Tier 3 ties for streamlined authorization—forcing a clear choice between AI ecosystems rather than allowing a foot in both camps.

While partnering with the U.S. AI ecosystem is compelling, the balance is crucial: too strict and countries might seek alternatives, too lax and security risks emerge. I think for now the choice is easy.

I discuss some limitations: model weight controls have limited value, the tier system lacks flexibility, and crucially, the framework cannot secure domestic AI infrastructure from foreign threats.

Give it a read! Paper here. For the busy folks, a two-page summary and summary table are in there. Thoughts welcome!