Navigating FLOP, FLOPs, FLOPS, FLOP/s, and PetaFLOP/s-days.

Summary

FLOP/s (floating point operations per second) refers to the computational performance of a processor, representing the number of operations executed per second (e.g., a processor can have a peak performance of X FLOP/s). Sometimes, people omit the "/" and simply say FLOPS.

In contrast, FLOP (floating point operations) denotes a quantity of operations (e.g., running a processor for a week results in a total of Y FLOP being executed). Others have used PetaFLOP/s-days. This measures the same kind of quantity, just without cancelling the time units: it is FLOP/s multiplied by days.

When discussing the "training compute" of ML systems, one means the total number of operations required to train a specific system. Therefore, FLOP is the appropriate unit.

Motivation

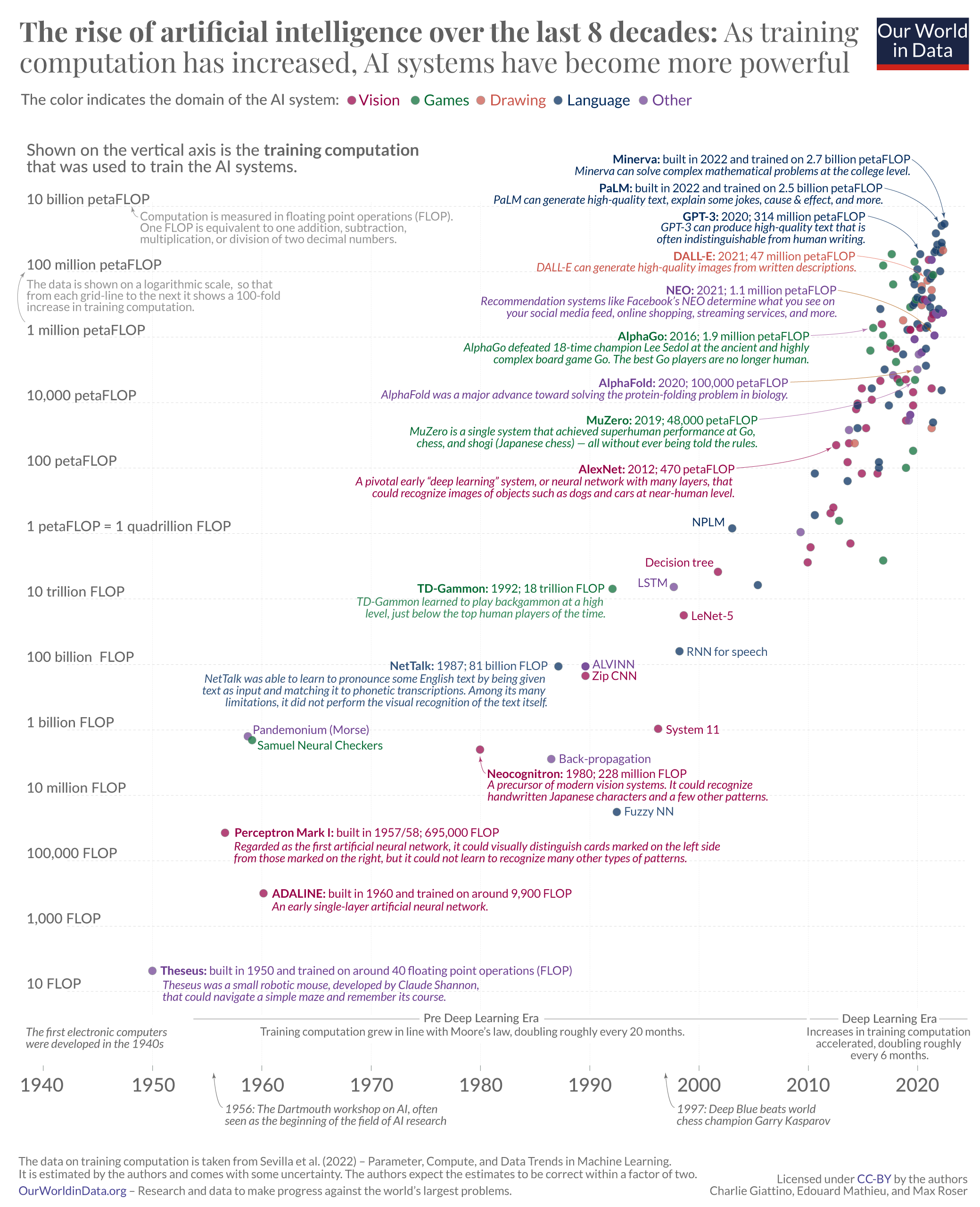

With the growing attention to AI, our paper "Compute Trends Across Three Eras of Machine Learning" has also gained prominence (yeah!), as it highlights the rapid progression of AI research. However, there has been some confusion concerning the metrics employed and, more specifically, what we actually measure. I think it is essential to understand these metrics, and I’m partially responsible for this confusion. So, let me try to clarify the confusion around FLOP, FLOPs, FLOPS, FLOP/s, and PetaFLOP/s-days to ensure accurate comprehension.

Clarifying the Terms

FLOP/s for performance

FLOP/s (floating point operations per second) refers to the computational performance of a processor: the number of operations it executes per second. An example of an operation is adding two floating-point numbers (a + b -> c). Put simply, FLOP/s describes how many such arithmetic operations the processor executes per second.
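The following Python sketch makes "counting operations" concrete. It counts every multiplication and addition in a naive matrix multiplication. It assumes the common convention that each multiply and each add counts as one FLOP; conventions differ, for example for fused multiply-add. The function name `matmul_with_flop_count` is hypothetical and chosen only for illustration. Multiplying an m×k matrix by a k×n matrix this way takes 2·m·n·k FLOP.

```python
# A minimal sketch: count floating-point operations in a naive matrix
# multiplication, assuming each multiply and each add counts as one FLOP.
# matmul_with_flop_count is a hypothetical helper, not a library function.

def matmul_with_flop_count(a, b):
    """Multiply a (m x k) by b (k x n), both given as lists of lists.

    Returns (result, flop), where flop is the number of multiplications
    plus the number of additions performed.
    """
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    flop = 0
    result = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply + one add
                flop += 2
            result[i][j] = acc
    return result, flop


if __name__ == "__main__":
    a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]       # 2 x 3
    b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]  # 3 x 2
    c, flop = matmul_with_flop_count(a, b)
    print(c)     # [[58.0, 64.0], [139.0, 154.0]]
    print(flop)  # 24, i.e. 2 * m * n * k = 2 * 2 * 2 * 3
```

The counter only tracks the quantity of operations. How long the loop takes is a separate question, and that question is what FLOP/s answers.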

For example, an NVIDIA A100 has a peak performance of 19.5e12 FLOP/s when operating on 32-bit floating-point numbers (FP32). The term FLOPS (without the "/") is also used for this metric. Less commonly, and unfortunately, I have also seen FLOPs used for it; which meaning is intended depends on the context.

FLOP for quantity

In our paper, we measured the amount of computation (compute, for short) required to train a specific AI system (see the figure above). This is a quantity, expressed in FLOP (floating point operations). We simply count how many of those basic operations the processor has executed, independent of how long that took. For example, running an NVIDIA A100, an AI accelerator, at its peak FP32 performance for a week totals 1.179e19 FLOP.[1] You could also run a processor twice as fast for half the time, and the amount of FLOP would be the same.
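The link between quantity and performance is a single multiplication: FLOP = FLOP/s × seconds. The sketch below reproduces the one-week A100 figure. It assumes the processor runs at its peak FP32 rate the entire time; real workloads usually achieve less. It also checks that doubling the speed while halving the time leaves the FLOP count unchanged. `total_flop` is a hypothetical helper name.

```python
# A minimal sketch: total FLOP (a quantity) = FLOP/s (a performance) x seconds.
# Assumes a constant peak rate for the whole duration, which real workloads
# rarely sustain. total_flop is a hypothetical helper, not a library function.

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s


def total_flop(flop_per_s: float, seconds: float) -> float:
    """Number of floating-point operations executed at a constant rate."""
    return flop_per_s * seconds


A100_FP32_PEAK = 19.5e12        # FLOP/s, peak FP32 figure quoted in the text
ONE_WEEK = 7 * SECONDS_PER_DAY  # 604,800 s

week_on_a100 = total_flop(A100_FP32_PEAK, ONE_WEEK)
print(f"{week_on_a100:.4g} FLOP")  # 1.179e+19 FLOP

# Twice the speed for half the time: the quantity of FLOP is unchanged.
twice_as_fast = total_flop(2 * A100_FP32_PEAK, ONE_WEEK / 2)
print(twice_as_fast == week_on_a100)  # True
```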

It's essential to understand that machine learning systems or models do not possess a "computational performance" in the traditional sense. Instead, we measure “how much” computation has gone into the training stage of an ML system. I won’t dive into why the training compute of an ML system is an important measure of AI progress; instead, I refer to the background section of this paper.

I previously used FLOPs (which I now see as a mistake) to indicate the plural of FLOP. For example, in our paper, we still used FLOPs instead of our now-preferred term FLOP to refer to quantity. However, a couple of months ago, we decided to adopt FLOP for quantity and FLOP/s (with an explicit "/") for computational performance. This approach aligns with the convention for GB (gigabyte), where we write 128 GB instead of 128 GBs.[2]

As for PetaFLOP/s-days, this metric also represents a quantity. It gives the number of days a processor running at one PetaFLOP/s (peta signifies 1e15) would need to execute that many operations. Writing the unit as a performance multiplied by a time makes it evident that it is a quantity, or "a duration of a given performance."[3]
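Converting between PetaFLOP/s-days and FLOP is also a single multiplication, by 1e15 FLOP/s × 86,400 s/day. Here is a minimal sketch; the helper names are hypothetical.

```python
# A minimal sketch: converting between PetaFLOP/s-days and FLOP.
# Both are quantities; one PetaFLOP/s-day is 1e15 FLOP/s sustained for one day.
# pfs_days_to_flop and flop_to_pfs_days are hypothetical helper names.

PETA = 1e15
SECONDS_PER_DAY = 86_400

FLOP_PER_PFS_DAY = PETA * SECONDS_PER_DAY  # 8.64e19 FLOP, roughly 1e20


def pfs_days_to_flop(pfs_days: float) -> float:
    """Convert a quantity in PetaFLOP/s-days to FLOP."""
    return pfs_days * FLOP_PER_PFS_DAY


def flop_to_pfs_days(flop: float) -> float:
    """Convert a quantity in FLOP to PetaFLOP/s-days."""
    return flop / FLOP_PER_PFS_DAY


print(pfs_days_to_flop(1))         # 8.64e+19
print(flop_to_pfs_days(1.179e19))  # about 0.136 (one week on one A100 at peak FP32)
```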

In summary, we have two categories:

- Performance: FLOP/s (preferred), FLOPS

- Quantity: FLOP (preferred), FLOPs, or PetaFLOP/s-days

Any M, G, T, P, or E in front of these units is an SI prefix (mega = 1e6, giga = 1e9, tera = 1e12, peta = 1e15, exa = 1e18).
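Here is a small formatter that illustrates the prefixes. The name `format_si` is hypothetical. It picks the largest prefix that keeps the number at or above 1 and covers only M through E, matching the line above. It attaches the prefix to either FLOP or FLOP/s, so the quantity/performance distinction is carried entirely by the unit string.

```python
# A minimal sketch: write a FLOP (quantity) or FLOP/s (performance) value
# with an SI prefix. format_si is a hypothetical helper, not a library function.

SI_PREFIXES = [("E", 1e18), ("P", 1e15), ("T", 1e12), ("G", 1e9), ("M", 1e6)]


def format_si(value: float, unit: str) -> str:
    """Return value with the largest SI prefix (M..E) that keeps it >= 1."""
    for prefix, factor in SI_PREFIXES:
        if abs(value) >= factor:
            return f"{value / factor:.3g} {prefix}{unit}"
    return f"{value:.3g} {unit}"  # below 1e6: no prefix


print(format_si(19.5e12, "FLOP/s"))  # 19.5 TFLOP/s  (performance)
print(format_si(1.179e19, "FLOP"))   # 11.8 EFLOP    (quantity)
```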

Additional Considerations

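Architectural Differences: GPUs typically achieve higher peak FLOP/s than CPUs because of their specialized, albeit less flexible, architecture. If a workload is well suited to a GPU (e.g., large, highly parallel matrix multiplications), it will run faster there. Tensor cores follow a similar concept: they are specialized units within a GPU that accelerate matrix multiply-accumulate operations.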

Number Precision: The precision of numbers, such as 16-bit (FP16) or 32-bit (FP32) floating-point representations, affects processing speed.[4] Lower-precision numbers and integers are typically processed faster. In the context of integers, the term "OP/s" is often used as a more general alternative.[5]
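For the same algorithm, precision changes the rate but not the number of operations. The same FLOP budget can therefore take very different amounts of time. The sketch below makes three assumptions:

- Illustrative peak rates loosely following NVIDIA's published A100 specifications: 19.5 TFLOP/s for FP32 and 312 TFLOP/s for dense FP16 on Tensor Cores.
- A hypothetical budget of 1e20 FLOP.
- A hypothetical helper name, `days_to_execute`.

Actual training runs reach only a fraction of peak.

```python
# A minimal sketch: time needed to execute a fixed FLOP budget at different
# peak rates. The rates are ASSUMED illustrative figures (loosely following
# NVIDIA's published A100 numbers); real runs achieve lower utilization.
# days_to_execute is a hypothetical helper, not a library function.

SECONDS_PER_DAY = 86_400

PEAK_FLOP_PER_S = {
    "FP32": 19.5e12,
    "FP16 (Tensor Cores)": 312e12,
}

BUDGET_FLOP = 1e20  # hypothetical training-compute budget (a quantity)


def days_to_execute(flop: float, flop_per_s: float) -> float:
    """Days needed to execute `flop` operations at a constant rate."""
    return flop / flop_per_s / SECONDS_PER_DAY


for precision, rate in PEAK_FLOP_PER_S.items():
    # The quantity (BUDGET_FLOP) stays the same; only the duration changes.
    print(f"{precision}: {days_to_execute(BUDGET_FLOP, rate):.1f} days")
# FP32: 59.4 days
# FP16 (Tensor Cores): 3.7 days
```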

Conclusion

So let's stick with FLOP for quantity and FLOP/s for performance.

[1] 19.5e12 FLOP/s * 1 week * 7 days/week * 24 hours/day * 60 min/h * 60 s/min ≈ 1.179e19 FLOP.

[2] h/t Tamay

[3] For example, one PetaFLOP/s-day refers to a processor with a computational performance of one PetaFLOP/s running for one day. A PetaFLOP/s-day equals 1e15 floating point operations per second for one day. A day has 86,400 seconds ≈ 1e5 seconds. Therefore, one PetaFLOP/s-day is 8.64e19 FLOP, or roughly 1e20 FLOP.

[4] It's worth noting that the recent US October 7th export controls introduced a measure that is somewhat agnostic to bit length by using OP/s * bit. OP/s describes computational performance independent of whether the operations are floating point or integer, and "* bit" captures performance across various bit lengths. For example, a processor with X OP/s peak performance on FP16 would be measured by multiplying the performance X by 16.

[5] In the future, FLOP might become obsolete (at least for some workloads) if integers become the dominant number representation. Then it would be OP and OP/s.
